Introduction

Hello! These are my lecture notes for the computer science modules I took while at Warwick.

PLEASE NOTE

I make no guarantee that these notes are correct.

Coverage

This table shows the coverage for each module and how I'd rate the quality.

| Module | Coverage | Quality |

|---|---|---|

| CS118 | IEEE-754, robot maze reference | Ok |

| CS126 | All content, very brief | Ok |

| CS130 | All content, not checked for correctness | Low |

| CS131 | All content, not checked for correctness | Low |

| CS132 | Does not include multithreading and multicore systems, quite brief | Good |

| CS139 | Does not include basics of Python and JS, quite brief | Good |

| CS140 | All content, quite brief | Good |

| CS141 | All content | Good |

| CS241 | All content | Ok |

| CS257 | All content, quite brief | Ok |

| CS258 | All content | Good |

| CS259 | All content | Good |

| CS260 | Does not include P and NP or NP-completeness | Good |

| CS261 | All content, quite brief | Ok |

| CS262 | All content | Good |

| CS263 | All content, very brief | Ok |

| CS313 | All content, quite brief | Good |

| CS325 | Missing quite a lot of Optimisations and Code Generation | Ok |

| CS331 | All content | Good |

| CS349 | Missing everything after type inference and before imperative languages as well as random bits throughout | Ok |

| CS355 | All content | Good |

| CS435 | All content | Good |

CS118 - Programming for Computer Scientists

These notes do not cover basic programming in Java.

IEEE-754

IEEE-754 is the IEEE Standard for Floating-Point Arithmetic.

Floating point numbers can be used to efficiently store very large or very small decimal numbers, similar to standard form/scientific notation but for binary. In Java, these are the float and double types which use 32 and 64 bits respectively.

Floating point numbers consist of a sign bit, exponent and fraction.

- The sign bit is the most significant bit and denotes whether the number is positive (0) or negative (1).

- The exponent describes how much to shift the mantissa. In a 32-bit number it is the most significant 8 bits after the sign bit.

- The mantissa is a binary fraction. The most significant bit represents $2^{-1}$, the second $2^{-2}$ and so on. In a 32-bit number it is the remaining 23 bits.

The value of a floating point number is given by $$ (-1)^{\text{sign bit}} \times 1.(\text{mantissa}) \times 2^{\text{exponent} - \text{bias}} $$ For a 32-bit number the bias is 127, for 64-bit it is 1023.

Floating point to base 10

An example of a 32-bit floating point number is 00111110001000000000000000000000.

The sign bit is 0, the exponent is 01111100 and the mantissa is 01000000000000000000000.

The value of the mantissa is $2^{-2} = 0.25$. The exponent is 124.

The value of the number is therefore given by $(-1)^0 \times 1.25 \times 2^{124-127} = 1 \times 1.25 \times 2^{-3} = 0.15625$

Base 10 to floating point

Consider 38.125.

38.125 is 100110.001 in fixed point binary. Shifting right until the MSB is 1 (normalising) gives 1.00110001. The exponent is 5 as the number has been shifted 5 places to the right. We now have $38.125 = 1.00110001 \times 2^5$, which is of the form $1.(\text{mantissa}) \times 2^{\text{exponent} - \text{bias}}$.

Using 32-bit precision, the exponent is given by 127+5 = 132 = 1000 0100 and the mantissa is 00110001. The sign is 0 so the 32-bit precision floating point representation of 38.125 is 01000010000110001000000000000000.

Special values

These are the special values for a 32-bit precision floating point number.

| Exponent | Mantissa | Value |

|---|---|---|

| 0 | 0 | 0 |

| 255 | 0 | Infinity |

| 0 | not 0 | Denormalised |

| 255 | not 0 | Not a number |

Robot Maze Reference

robot methods

| Name | Arguments | Returns |

|---|---|---|

getRuns | None | int - Number of previous runs the robot has made of the current maze |

look | int - Direction in which to look | int - State of the maze square in the given direction |

face | int - Direction in which to face the robot | void |

setHeading | int - Heading in which to face the robot | void |

getHeading | None | int - Current heading of the robot |

getLocation | None | Point - The x and y coordinates of the robot in the maze |

getTargetLocation | None | Point - The x and y coordinates of the robot's target |

Headings

NORTH, EAST, SOUTH and WEST are referred to as headings.

Headings are used for setHeading and returned by getHeading.

| Name | Value |

|---|---|

NORTH | 1000 |

EAST | 1001 |

SOUTH | 1002 |

WEST | 1003 |

Directions

AHEAD, RIGHT, BEHIND and LEFT are referred to as directions.

Directions are relative to the robot's current heading. Directions are used for

look and face. CENTRE can be used as a null value.

| Name | Value |

|---|---|

AHEAD | 2000 |

RIGHT | 2001 |

BEHIND | 2002 |

LEFT | 2003 |

CENTRE | 2004 |

States

WALL, PASSAGE and BEENBEFORE are referred to as states that a

square in the maze can have. They are returned by look. PASSAGE

means an empty square that has not been visited yet, BEENBEFORE is an

empty square that has been visited.

| Name | Value |

|---|---|

WALL | 3000 |

PASSAGE | 3001 |

BEENBEFORE | 4000 |

Coordinates

Maze coordinates start in the top left corner with $(1,1)$ and finish in

the bottom right corner. The default maze is $15 \times 15$, so the

bottom right corner is $(15,15)$. The coordinates of the robot are given

by getLocation. The coordinates of the target are given by

getTargetLocation. Both of these return a Point object (java.awt.Point), where the x

coordinate is given by p.x and the y by p.y where p is the Point

object.

Important notes

Only headings can be used as the argument for setHeading. Only

directions can be used as the argument for look and face.

IRobot is an interface, robot is an instance of an implementation of

IRobot.

CS126 - Design of Information Structures

These notes are a brief overview of the content for CS126.

Analysis of algorithms

A good algorithm will either be space or time efficient. There are two main ways to test the efficiency of algorithms - experimental and theoretical analysis.

Experimental analysis involves implementing and running an algorithm to determine how much time and space it takes to run. This type of analysis requires the algorithm to be implemented, all types of input to be tested and consistent system performance. In reality, it might not be possible to implement an algorithm, it's almost impossible to consider all types of input and different systems, even those with the same hardware and software, will still not necessarily have consistent run times.

Theoretical analysis uses mathematical methods to determine the asymptotic run time of an algorithm. It uses an abstract description of the algorithm as opposed to a concrete implementation and characterises the time or space complexity as a function of the input size. This allows analysis of an algorithm independent of hardware and software.

Asymptotic analysis

The running time of an algorithm can be split into three cases - best, worst and average. The best case is the minimum time an algorithm takes to run and is not that useful. The worst case is the maximum time an algorithm takes to run for any input. Average case determines how long it typically takes for an algorithm to run, somewhere between best and worst. This can be difficult to determine.

The best, worst and average case can be expressed using asymptotic notation. This ignores constant factors and lower order terms in a function to focus on the main part which affects its rate of growth.

Big-O notation

Big-O notation is used to characterise the worst case complexity of an algorithm. For two functions $f(n)$ and $g(n)$ it can be said that $f(n)$ is $O(g(n))$ if there exists $c>0$ and $N\geq1$ such that $$ f(n) \leq cg(n)\quad\forall n \geq N $$ Big-O notation gives an upper bound on the growth rate of a function as $n$ tends towards infinity. $f(n)$ is $O(g(n))$ means the growth rate of $f(n)$ is no more than that of $g(n)$.

Big-Omega

Big-Omega notation can be used characterise the best case complexity of an algorithm. Formally, $f(n)$ is $\Omega(g(n))$ if there exists $c>0$ and $N\geq 1$ such that $$ f(n) \geq cg(n) \quad \forall n \geq N $$ This is the opposite of big-O and gives the lower bound of the growth rate of $f(n)$.

Big-Theta

Big-Theta notation gives the average case complexity of a function. Formally, $f(n)$ is $\Theta(g(n))$ if there exists $c',c'' > 0$ and $N \geq 1$ such that $$ c'g(n) \leq f(n) \leq c''g(n) \quad \forall n \geq N $$ Big-Theta gives an upper and lower bound on the growth rate of $f(n)$ and is basically a combination of both big-O and big-Omega.

Examples

-

$2n+10$ is $O(n)$ because $$ \begin{aligned} 2n + 10 &\leq cn\\ (c-2)n &\geq 10\\ n &\geq \frac{10}{c-2} \end{aligned} $$ So the constants could be $c=3$ and $N = 10$ or anything else such that the inequality holds.

-

$n^2$ is not $O(n)$ because $$ \begin{aligned} n^2 &\leq cn\\ n &\leq c \end{aligned} $$ and this inequality can't be satisfied because $c$ is a constant.

-

$3n^3 + 20n^2 + 5$ is $O(n^3)$ because $$ \begin{aligned} 3n^3 + 20n^2 + 5 &\leq cn^3\\ 3 + \frac{20}{n} + \frac{5}{n^3} &\leq c \end{aligned} $$ which holds when $c = 4$ and $n \geq 21$ as $$ \frac{20}{n} + \frac{5}{n^3} \leq 1 $$ for all $n \geq 21$.

-

$5n^2$ is $\Omega(n^2)$ because $$ 5n^2 \geq cn^2 $$ for $c = 5$, $n \geq 1$.

-

$5n^2$ is $\Omega(n)$ because $$ 5n^2 \geq cn $$ for $c = 1$ and $n \geq 1$.

-

$5n^2$ is $\Theta(n^2)$ as it is both $O(n^2)$ and $\Omega(n^2)$.

-

$5n^2 + 3n\log n + 2n + 5$ is $O(n^2)$ because $$ 5n^2 + 3n\log n + 2n + 5 \leq (5+3+2+5)n^2 = cn^2 $$ when $c = 15$ and $n \geq 1$.

-

$3\log n + 2$ is $O(\log n)$ because $$ 3\log n + 2 \leq c\log n $$ when $c = 5$ and $n \geq 2$. Note that $n = 1$ will give $\log n = 0$ so $n \geq 2$ is necessary.

-

$2^{n+1}$ is $O(2^n)$ because $$ 2^{n+1} = 2 \cdot 2^n \leq c \cdot 2^n $$ when $c = 2$ and $n \geq 1$.

-

$3n\log n -2n$ is $\Omega(n\log n)$ because $$ 3n \log n - 2n = n\log n +2n(\log n - 1) \geq cn \log n $$ when $c = 1$ and $n \geq 2$.

-

$(n+1)^5$ is $O(n^5)$ because using the binomial expansion $$ (n+1)^5 = \binom{5}{5}n^5 + \binom{5}{4}n^4 + ... + 1 \leq cn^5 $$ when $c = \sum_{n=0}^5 \binom{5}{n}$ and $n \geq 1$.

-

$n$ is $O(n\log n)$ because $$ \begin{aligned} n &\leq cn\log n\\ 1 &\leq c\log n \end{aligned} $$ when $c = 4$ and $n \geq 2$.

-

$n^2$ is $\Omega(n\log n)$ because $$ \begin{aligned} n^2 &\geq cn \log n\\ n &\geq c\log n \end{aligned} $$ when $n \geq 1$ and $c = 1$.

-

$n \log n$ is $\Omega(n)$ because $$ \begin{aligned} n\log n &\geq cn\\ \log n &\geq c \end{aligned} $$ when $c=1$ and $n \geq 10$.

Conclusion

-

$f(n)$ is $O(g(n))$ if $f(n)$ is asymptotically less than or equal to $g(n)$

-

$f(n)$ is $\Omega(g(n))$ if $f(n)$ is asymptotically greater than or equal to $g(n)$

-

$f(n)$ is $\Theta(g(n))$ if $f(n)$ is asymptotically equal to $g(n)$

Recursion

See recursion.

Recursive methods are those that call themselves. A recursive method should have a base case and recursive case. For example, the factorial function has recursive case $f(n) = n \times f(n-1)$ and base case $f(0) = 1$.

Binary search is a recursive divide and conquer search algorithm for sorted data. It chooses a pivot and recursively searches the half of the list based on the difference between the search term and pivot. Binary search has complexity $O(\log n)$. Binary search is an example of linear recursion, where each recursive call makes only one recursive call.

The Fibonacci function, $F(n) = F(n-1) + F(n-2)$ uses binary recursion, as each call makes two recursive calls. A function making more than two recursive calls is said to use multiple recursion.

Data structures

Abstract data types and data structures

- An abstract data type (ADT) is a description of a data structure and its methods, like a Java interface but for a data structure instead of a class.

- A data structure is a concrete implementation of an ADT.

Arrays

Arrays are fixed size, contiguous blocks of allocated memory. Access is $O(1)$ and insertion is $O(n)$.

Linked lists

A linked list consists of nodes which point to the next node and possibly the previous as well.

Singly-linked list

Each node in a singly-linked list has a value and a pointer to the next node and not the previous. There is also a pointer to the head and tail of the list. Access, insertion and deletion are $O(n)$, although insertion and access at the head or tail is $O(1)$. Unlike arrays the linked list is dynamically sized.

Doubly-linked list

Each node points to the next and previous node. The doubly-linked list can be traversed forwards or backwards.

Stacks

A stack is a LIFO data structure. The stack ADT has pop and push methods

to remove the top element and insert an element at the top respectively.

Stacks can be implemented using arrays and each operation is $O(1)$ but

the size is fixed.

Queues

A queue is FIFO data structure. The ADT has enqueue and dequeue methods

for adding to and removing from the queue respectively. The queue can be

implemented with an array. All operations are $O(1)$, but the size is

fixed.

Lists

The list ADT has support for insertion and deletion at arbitrary

positions. It has size, set, get, add and remove methods. An array based

list needs to grow when its backing array gets full. The amortised time

(average time for each operation) of an insertion is better when

doubling the size than increasing the size by a constant factor.

A positional list is a list where the position of an item is relative to the others. This can be implemented with a doubly-linked list.

Maps

Maps are a searchable collection of key-value pairs. Maps support insertion, deletion and searching. Keys must be unique. The key is hashed and divided by the length of the backing structure to give the index of the value.

Hash collisions

Sometimes different keys will map to the same index. This is called a collision and there are two ways to deal with them. The first is separate chaining, where each location is a linked list and if two items have the same index then only the linked list has to be traversed to find it. The other solution is linear probing, where the item is placed in the next vacant adjacent cell. If the hash function does not distribute values well then both methods will become more inefficient. For separate chaining the list will become longer and take longer to traverse, for linear probing more indices will have to be traversed to find a vacant one. The best case for a map is $O(1)$, but with collisions on every element this can be as bad as $O(n)$.

Sets

A set is a collection of distinct, unordered elements. Sets support efficient membership tests and union, intersection and subtraction operations.

Sorting

Generic merge

The generic merge algorithm is used to sort two ordered lists.

public static <E extends Comparable<E>> E[] genericMerge(E[] a, E[] b){

E[] result = (E[])new Comparable[a.length + b.length];

int ri = 0, ai = 0, bi = 0;

// While both lists contain items

while(!(ai == a.length || bi == b.length)){

// Compare the current items

if(a[ai].compareTo(b[bi]) > 0){

// Add b to the result

result[ri++] = b[bi++];

} else{

// Add a to the result

result[ri++] = a[ai++];

}

}

// Add the remainder of a

while(ai < a.length){

result[ri++] = a[ai++];

}

// Add the remainder of b

while(bi < b.length){

result[ri++] = b[bi++];

}

// Return the result

return result;

}

Merge sort

Merge sort is a divide and conquer sorting algorithm. It takes the list, recursively splits it until left with singletons and then uses the generic merge algorithm above to merge the sorted lists.

private static <E> E[] mergeSort(E[] list){

int size = list.length;

if(size <= 1){

// Return singleton or empty list for merging

return list;

} else{

// Find middle index

int pivot = Math.floorDiv(size, 2);

// Create lists for two halves

Object[] left = new Object[pivot];

Object[] right = new Object[size-pivot];

// Copy list to halves

System.arraycopy(list, 0, left, 0, pivot);

System.arraycopy(list, pivot, right, 0, size-pivot);

// Recursively merge sort halves

left = mergeSort((E[])left);

right = mergeSort((E[])right);

// Return merged sorted lists

return genericMerge((E[])left, (E[])right);

}

}

Merge sort has time complexity $O(n\log n)$.

Quick sort

Quick sort is a randomised divide and conquer sorting algorithm. It chooses a random pivot, then splits the list into items smaller and larger than the pivot, then does the same for each of those lists until every item has been a pivot. The worst case of quick sort occurs when the random pivot is not ideal every time which has complexity $O(n^2)$. The average case is $O(n\log n)$.

Selection sort

Selection sort can be implemented using an unsorted priority queue. It

needs $n$ insertions and $O(n^2)$ removeMin calls, giving an overall run

time of $O(n^2)$.

Insertion sort

Insertion sort uses a sorted priority queue. It still has $O(n^2)$ run time but may perform better than selection sort.

Heaps and priority queues

Priority queue

A priority queue is a queue in which each element is assigned a

priority. The priority queue ADT has insert, removeMin and min methods

for insertion, removing the item with the lowest priority and accessing

the item with the lowest priority respectively. Priority queues can be

used for sorting by inserting elements with priority equal to value and

then removing.

Heap

A heap is a binary tree which stores keys and satisfies the heap-order property. The heap-order property states that every child node is less than or equal to it's parent. The first node in a heap is the root and the last node is the rightmost node of maximum depth. A heap storing $n$ items has height $O(\log n)$.

A priority queue can be implemented using a heap. Each node has the priority as a key where a smaller key means a higher priority and the root node holds the highest priority key.

An item can be inserted into a priority queue heap in the deepest, rightmost part of the heap. The heap order can then be restored using the upheap algorithm. This swaps the new node with its parent until it is smaller than its parent. This runs in $O(\log n)$.

The highest priority item will be the root. When the root is removed, the root is replaced with downheap algorithm. The largest child of a node is swapped with it until it is larger than all of its children.

A heap-based priority sort has run time $O(n \log n)$ because it needs $n$ calls of $O(\log n)$ for each insertion and $n$ calls of removeMin which takes $O(\log n)$ each.

Trees

A graph is a collection of nodes and edges. A tree is a connected, acyclic, undirected graph. This means there is a path between every pair of distinct nodes, there are no cycles and you can go either way along an edge. A binary tree is one in which every node has at most 2 children.

Traversals

Any tree can be traversed in 2 ways - pre-order and post-order. A binary tree can also be traversed in-order.

Pre-order

In a pre-order traversal, the nodes are visited from top to bottom, left to right.

algorithm preOrder(v)

print(v)

for each child w of v

preOrder(w)

Post-order

In a post-order traversal, the nodes are visited from bottom to top, left to right.

algorithm postOrder(v)

for each child w of v

postOrder(w)

print(v)

In-order

In a pre-order traversal, the nodes are visited from top to bottom, left to right.

algorithm inOrder(v)

if v.left exists

inOrder(v.left)

print(v)

if v.right exists

inOrder(v.right)

Decision trees

A decision tree is a binary tree in which each internal node is a question and each external node is an outcome. Decision trees can be used to find the lower bound for a sorting algorithm. If each external node in the tree is a possible ordering of the elements there must be $n!$ (from the definition of permutations) of them, giving a tree with height $\log(n!)$. This means any comparison based sorting algorithm takes at least $\log(n!)$ time. It can be shown that this means any comparison-based sorting algorithm must run in time $\Omega(n\log n)$, so $O(n \log n)$ is the most efficient comparison-based sorting algorithm time complexity.

Binary search tree

A binary search tree (BST) is a binary tree containing keys on the internal nodes and nothing on external nodes. For each internal node, the keys in the left subtree are less than its key and the keys in the right are greater than. An in-order traversal of the tree gives the keys in increasing order.

Search

To find a key in a BST, start at the root. If the key is equal to the root key, the item is found, if it is less than recursively search the left subtree, if it is greater than recursively search the right subtree. If an external node is reached the key is not found.

Insertion

Search the tree for the new key, then replace the external node with a new node containing the new key and give it two empty children.

Deletion

Search for the key to remove. If it has no internal children just replace it with an empty node. If it has one internal child, replace it with that child. If the node has two internal children, find the next node using an in-order traversal, then replace the removed node with it.

Performance

Space $O(n)$, height is best case $O(\log n)$ worst case $O(n)$, and search, insertion and deletion are $O(h)$ where $h$ is the height.

AVL trees

AVL trees are balanced binary trees. They are balanced regardless of insertion order. It achieves this using the height balance property, which states the difference in height between internal nodes and their internal children is at most 1. The height of an AVL tree storing $n$ keys is $O(\log n)$.

Rotations

Inserting into an AVL tree works the same as a binary tree, but if the insertion makes the tree unbalanced it needs to be rotated to balance it again. Rotations allow re-balancing the tree without violating the BST property.

Performance

AVL trees take $O(n)$ space and searching, insertion and removal take $O(\log n)$ time. A single restructuring takes $O(1)$ time with a linked-structure binary tree.

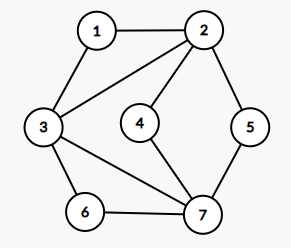

Graphs

-

A graph is a collection of nodes/vertices and edges.

-

Edges on a graph can be directed or undirected.

-

Vertices are adjacent if they are connected by an edge.

-

Edges are incident to a vertex if they are connected to it.

-

The degree of a vertex is the number of edges connected to it.

-

A path is a sequence of connected vertices and edges in a graph.

-

A cycle is a path where the start and end vertices are the same.

-

An connected, undirected, acyclic graph is a tree.

-

The sum of the degrees is twice the number of edges.

-

A subgraph is a graph which contains a subset of the edges and vertices in a graph.

-

A spanning subgraph is a subgraph which contains all vertices in the original graph.

-

A forest is a collection of trees.

-

A spanning tree of a connected graph is a spanning subgraph which is also a tree.

Graph traversals

Depth first search

Depth first search (DFS) is a graph traversal where the graph is traversed as deeply as possible, then across.

Breadth first search

Breadth first search (BFS) traverses across a graph, then down.

CS130 - Mathematics for Computer Scientists I

These are just my unedited lecture notes. I wasn't particularly good at CS130 so some of it is probably wrong.

Sets, sequences and functions

Introduction to sets

A set is a collection of objects. Sets are written in curly brackets. For example, the set of integers:

$$ \mathbb{Z} = \{0,1,-1, 2, -2, ...\} $$ To say an item belongs to a set you use $\in$, which means "belongs to" or "is a member of". For example, $12\in\mathbb{Z}$ because 12 is an integer.

The ordering of sets is not important. $\{1,0\}$ is the same as

$\{0,1\}$.

Sets with different numbers of the same item are the same. $\{1,1,1,2\}$

is the same as $\{1,2,2\}$ is the same as $\{1,2\}$.

To show one set is a subset of another, you can use $\subseteq$. For

example, $\{1,2,3,4\}\subseteq\mathbb{Z}$ as every item in $\{1,2,3,4\}$

is also in $\mathbb{Z}$.

Note that

$\mathbb{N}\subseteq\mathbb{Z}\subseteq\mathbb{Q}\subseteq\mathbb{R}$.

Also note that $\mathbb{Z}$ includes 0.

The number of items in a set (it's cardinality) $A$ is expressed as $|A|$. For example, $|\{1,2,3,4,5\}|=5$.

Specifying sets

You can specify a set using set builder notation. For example, the set of integers greater than 7 can be expressed

as $\{n\in\mathbb{Z}:n>7\}$, or the set of integers such that

$n > 7$.

The set of square integers can be expressed as $\{n^2:n\in\mathbb{Z}\}$.

This means the set of $n^2$ such that $n$ is an integer.

The set $\{(-1)^k:k\in\mathbb{Z}\}$ is the set of $(-1)^k$, such that

$k$ is an integer. For $k = 0$, $(-1)^0 = 1$, for $k = 1$,

$(-1)^1 = -1$, for $k = 2$, $(-1)^2 = 1$.

This pattern will repeat, so $\{(-1)^k:k\in\mathbb{Z}\}=\{1,-1\}$.

An empty set can be shown using $\emptyset$ or $\{\:\}$.

Sets with only one element ($|$set$| = 1$) are called singletons.

Finite sequences

Let $A = \{a, b, c\}$. $A^2 = \{(a,a),(a,b),(a,c),...,(c,c)\}$.

($(a,a)$ is a tuple that represents a sequence)

This can be generalised to $A^n =$ the set of all sequences of length

$n$ of elements of $A$.

If $A$ is finite, $|A^n| = |A|^n$.

The general form of $A^n$ is

$$ A^n = \{(a_1,...,a_n):a_1\in A, a_2\in A, ..., a_n\in A\} $$ This means the set of sequences from $a_1$ to $a_n$ such that $a_1$ to $a_n$ belong to $A$. For example, the set $\mathbb{Z}^2$ can be expressed as

$$ \mathbb{Z}^2 = \{(x,y):x\in \mathbb{Z}, y \in \mathbb{Z}\} $$

Introduction to functions

If there is a rule that assigns to each element $x\in X$ an

unambiguously determined element of the set Y, then it is a function

from X to Y.

This can be denoted as $f: X \to Y$.

$X$ is called the domain and $Y$ is called the co-domain.

Take $f(x) = x^2 - 3$ for example. For each value of $x$,

$x\mapsto x^2-3$. ($\mapsto$ means maps to).

This can then be expressed as $f:\mathbb{R}\to \mathbb{R}$, as each real

number is mapped to another real number.

Another example is $f(x) = \sqrt{x}$. This will map any positive real number to a positive real number, so $f:\mathbb{R}\geq 0 \to \mathbb{R} \geq 0$.

The left set, $X$, is the domain, and each item in the set ($x\in X$) is

shown to map to a value in the right set, $Y$, which is the co-domain.

It is possible for two items from $X$ to map to one item in $Y$.

This shows clearly how the function maps every item in set $X$ to set

$Y$.

It can be said that $f(x)$ is the image of $x$ under $f$ and that $f$

maps $X$ to $Y$.

More examples of functions

Consider the following functions

-

$f_1(x)=\frac{1}{x}$

-

$f_2(x)=\sqrt{x}$

-

$f_3(x) = \pm \sqrt{x^2+1}$

Which of these are functions from $\mathbb{R}$ to $\mathbb{R}$?

-

This is not a function from $\mathbb{R}$ to $\mathbb{R}$ as $f(0)$ is undefined, so there is not a mapping of every $x$ to some $f(x)$.

-

This is not a function from $\mathbb{R}$ to $\mathbb{R}$ as negative numbers map to complex numbers, which are not in the set $\mathbb{R}$.

-

This is not a function from $\mathbb{R}$ to $\mathbb{R}$, as it maps to two values, not one.

Logic

Introduction to propositional logic

A proposition is a statement that asserts something. Propositional logic abstracts propositions with propositional variables or atomic propositions. $p$ or $q$ are common variables for atomic propositions.

Compound propositions are made up of atomic propositions or other

compound propositions.

Some examples:

| Compound proposition | Name |

|---|---|

| $\neg p$ | negation (logical not) |

| $p \wedge q$ | conjunction (logical and) |

| $p \vee q$ | disjunction (logical or) |

Boolean functions and truth tables

Boolean functions are of the form $B^n \to B$, where

$B = \{True, False\}$.

Example:

$$

\begin{aligned}

& B^2 = \{FF,FT,TF,TT\}\\

& \text{A function that could map } B^2 \text{ to } B \text{ is } h(x,y) = x \vee y \text{ where } x,y \in B.

\end{aligned}

$$

You can draw a truth table to show the possible outputs

of a boolean function.

Here is the truth table for $x \vee y$:

| $x$ | $y$ | $f(x,y)$ |

|---|---|---|

| $F$ | $F$ | $F$ |

| $F$ | $T$ | $T$ |

| $T$ | $F$ | $T$ |

| $T$ | $T$ | $T$ |

$x \to y$ shows material implication. This means $x$ implies $y$. It is equivalent to $(\neg x) \vee y$.

| $x$ | $y$ | $x \to y$ |

|---|---|---|

| $F$ | $F$ | $T$ |

| $F$ | $T$ | $F$ |

| $T$ | $F$ | $T$ |

| $T$ | $T$ | $T$ |

Logical equivalence and laws of propositional logic

Boolean functions and logical equivalence

Propositions $P$ and $Q$ are logically equivalent if the proposition

$P \Leftrightarrow Q$ is a tautology.

The truth table for $x \Leftrightarrow y$:

| $x$ | $y$ | $x \Leftrightarrow y$ |

|---|---|---|

| $F$ | $F$ | $T$ |

| $F$ | $T$ | $F$ |

| $T$ | $F$ | $F$ |

| $T$ | $T$ | $T$ |

Both values have to be the same for $x \Leftrightarrow y$ to be true. $\Leftrightarrow$ shows equivalence.

A proposition is a tautology if it is true regardless of the values of

atomic propositions. A tautology is a proposition which is always true.

$P \equiv Q$ shows two propositions, $P$ and $Q$, are equivalent.

Laws of propositional logic

| Rule | Name |

|---|---|

| $(x\vee y)\vee z \equiv x \vee y\vee z)$ | associativity |

| $(x\wedge y)\wedge z \equiv x\wedge (y\wedge z)$ | associativity |

| $x \vee y \equiv y \vee x$ | commutativity |

| $x \wedge y \equiv y \wedge x$ | commutativity |

| $x \vee \text{False} \equiv x$ | identity |

| $x \wedge \text{True} \equiv x$ | identity |

| $x \vee x \equiv x$ | idempotence |

| $x \wedge x \equiv x$ | idempotence |

| $\neg (x \vee y) \equiv \neg x \wedge \neg y$ | De Morgan's law |

| $\neg(x \wedge y) \equiv \neg x \vee \neg y$ | De Morgan's law |

| $\neg (\neg x) \equiv x$ | double negation |

| $x \vee \neg x \equiv \text{True}$ | excluded middle |

| $x \wedge \neg x \equiv \text{False}$ | excluded middle |

| $x \vee (x \wedge y) \equiv x$ | absorption |

| $x \wedge (x \vee y) \equiv x$ | absorption |

| $x \wedge (y \vee z) \equiv (x \wedge y) \vee (x \wedge z)$ | distributivity |

| $x \vee (y \wedge z) \equiv (x \vee y) \wedge (x \vee z)$ | distributivity |

| $x \vee \text{True} = \text{True}$ | annihilation |

| $x \wedge \text{False} \equiv \text{False}$ | annihilation |

Alternative notation

Logical AND: $x \wedge y$, $x \& y$, $x \cdot y$, $xy$.

Logical NOT: $\neg x$, $\bar{x}$.

Predicates and quantifiers

Introduction to predicates and quantifiers

A predicate is a statement about a variable which can be true or false but not both. For example $p(u)$ is a predicate and $u$ is a variable.

Variables can be bound by quantifiers.

| Quantifier | Meaning |

|---|---|

| $\forall$ | "For all" |

| $\exists$ | "There exists" |

For example, $p(u): u > 2$ and $u<17$ is a predicate. For

$u \in \mathbb{Z}$, $p(0)$ is false and $p(15)$ is true.

$\exists u \;p(u)$ is true, as there exists an integer between 2 and 17,

whereas $\forall u \:p(u)$ is false as it is not true for all integers.

When a quantifier applies to a variable, the variable is said to be bound. For example, $p(x): x^2 = 2$ is a predicate and the variable $x$ is not bound, so it is free. If it is said that $\exists x \in \mathbb{Z} \; p(x)$ then the variable is now bound to a quantifier and is no longer free.

It is possible for predicates to have multiple variables. $p(x,y): x^2 - y^2 = (x-y)(x+y)$, for example, has 2 variables. Given that $x,y \in \mathbb{Z}$, It can be said that $\exists x \exists y \; p(x,y)$ and also $\forall x \forall y \; p(x,y)$ as the statement holds true for all integers $x$ and $y$.

Alternation of quantifiers

$q(u,v):|u-v|=1$, $u,v \in \mathbb{Z}$.

$\forall u \; \exists v \; q(u,v)$ is true, as for every integer $u$

there exists another integer $v$ with a difference of 1.

$\exists v \; \forall u \; q(u,v)$ is false, as there does not exist a

single integer $v$ which has a difference of 1 with every number $u$.

Despite having the same quantifiers, the order is important.

Laws of predicate logic

Quantification over finite sets

Consider a finite set of integers $A = \{1,2,3,4,5,...,n\}$.

$\forall x \in A\; P(x) \equiv P(1) \wedge P(2) \wedge ... \wedge P(n)$

and

$\exists x \in A \; P(x) \equiv P(1) \vee P(2) \vee ... \vee P(n)$ where

$P(x)$ is a predicate.

Another example:

$S = \{a,b,c\}$

$\forall x \; \exists y \; f(x,y)$

You can expand this out using the methods above:

$(\exists y \; f(a,y))\wedge(\exists y \; f(b,y))\wedge(\exists y \; f(c,y))$

Then applying the method again:

$(f(a,a) \vee f(a,b) \vee f(a,c)) \wedge$

$(f(b,a) \vee f(b,b) \vee f(b,c)) \wedge$

$(f(c,a) \vee f(c,b) \vee f(c,c))$

Working with quantifiers

$\forall x \; \text{True} \equiv \text{True}$

$\exists x \; \text{True} \equiv \text{True}$

$\forall x \; \text{False} \equiv \text{False}$

$\exists x \; \text{False} \equiv \text{False}$

$\forall x \; (P(x) \wedge Q) \equiv (\forall x \; P(x)) \wedge Q$

$\exists x \; (P(x) \wedge Q) \equiv (\exists x \; P(x)) \wedge Q$

These are interchangeable with $\vee$.

De Morgan's laws for quantifiers:

$\neg(\forall x \; P(x)) \equiv \exists x \; \neg P(x)$

$\neg(\exists x \; P(x)) \equiv \forall x \; \neg P(x)$

$\forall x \; (P(x) \wedge Q(x)) \equiv (\forall x\; P(x))\wedge (\forall x \; Q(x))$

This will not work for $\exists$, as $\exists x \; (P(x) \wedge Q(x))$

means there exists an $x$ such that both $P(x)$ and $Q(x)$ are true, but

if you try to split it like before you get

$(\forall x\; P(x))\wedge (\forall x \; Q(x))$ and there is a

possibility the values for $x$ will be different but still true for both

predicates.

There is a similar rule for disjunction ($\vee$). This only works with $\exists$ in both directions. $\exists x\; (P(x) \vee q(x)) \equiv (\exists x \; P(x)) \vee (\exists x \; Q(x))$. This will work with $\forall$ in the backward direction for the same reason as the last one.

Uniqueness

Suppose you want to find if only one item in a set satisfies a predicate. To express "at least one item satisfies the predicate" you can use $\exists x \in S\; P(x)$ - there exists an $x$ such that $P(x)$. This checks there is an item that satisfies the predicate, but not that it is the only one - there could be multiple.

To check it is unique, you can use $\forall y \in S \; \forall z \in S \; [(P(y)\wedge P(z))\to y = z]$. This says for all $y$ and $z$ which belong to $S$, if both satisfy the predicate it is implied that they are equal. Combining the checks for an item which satisfies $P(x)$ and is unique, you get $(\exists x \in S\; P(x)) \wedge (\forall y \in S \; \forall z \in S \; [(P(y)\wedge P(z))\to y = z])$.

The second part can be simplified using the previously mentioned rules. $(P(y)\wedge P(z)) \to y=z$ can be changed into $\neg P(y) \vee \neg P(z) \vee y=z$ because implication is logically equivalent to $\neg x \vee y$. Now we have $(\exists x \in S\; P(x)) \wedge (\forall y \in S \; \forall z \in S \; [\neg P(y) \vee \neg P(z) \vee y=z])$.

Algebra of sets

Set-theoretic operations

- $A \cup B$ is the union of $A$ and $B$.

- $A \cup B = \{x:x \in A \vee x \in B\}$.

- $A \cap B$ is the intersection of $A$ and $B$.

- $A \cap B = \{x:x \in A \wedge x \in B \}$.

- $A \setminus B$ is the set difference.

- $A \setminus B = \{x:x\in A \wedge x \notin B\}$.

- $A \bigtriangleup B$ is the symmetric difference.

- $A \bigtriangleup B = (A \setminus B) \cup (B \setminus A)$.

The laws of Boolean logic (associativity, distributivity, idempotence, commutativity, De Morgan's, etc.) also apply to sets, where $\cup$ is equivalent to $\vee$, $\cap$ is equivalent to $\wedge$ and an overline is used to show negation ($\overline{A}$).

Set difference proof

Prove $A \bigtriangleup B = (A\setminus B) \cup (B \setminus A) = (A \cup B) \setminus (A \cap B)$:

-

Let $x \in (A \setminus B) \cup (B \setminus A)$.

-

Consider the case $x \in A \setminus B$: $x \in A$ and $x \notin B$.

If $x\in A$ then $x \in A \cup B$. If $x \notin B$ then $x \notin A \cap B$. Combining the two expressions using the definition of $A \setminus B$ gives $x \in (A \cup B) \setminus (A \cap B)$. -

The same can be said when $x \in B \setminus A$:

$x \in B$, $x \notin A$, $x \in A \cup B$, $x \notin A \cap B$, $x \in (A \cup B) \setminus (A \cap B)$.

This has shown that $(A\setminus B) \cup (B \setminus A) \in (A \cup B) \setminus (A \cap B)$, but not the other way round.

-

-

Let $x \in (A \cup B) \setminus (A \cap B)$.

Using the set difference, $x \in (A \cup B)$ and $x \notin (A \cap B)$.

If $x \in (A \cup B)$, $x \in A$ or $x \in B$. Both can not be true or $A \cap B$ would be true, but $x \notin A \cap B$.

This means either $x \in A \wedge x \notin B$ or $x \in B \wedge x \notin A$.

Therefore, $x \in A \setminus B$ or $x \in B \setminus A$ by definition of set difference.

This has shown that $(A\cup B)\setminus (A \cap B) \in (A \setminus B) \cup (B \setminus A)$.

It has now been shown that both $(A\setminus B) \cup (B \setminus A) \in (A \cup B) \setminus (A \cap B)$ and $(A\cup B)\setminus (A \cap B) \in (A \setminus B) \cup (B \setminus A)$. Therefore the sets are equal.

Expressions with indices

To define multiple sets, where each is the set of integers from $i$, you can use $A_i = \{x \in \mathbb{Z} : x \geq i\}$. To express the union of all of those sets ($A_1 \cup A_2 \cup A_n$), you can use a big $\cup$, similar to series notation: $\displaystyle\bigcup_{i=1}^{n}{A_i}$ and similarly $\displaystyle\bigcap_{i=1}^{n}{A_i}$ for intersection. Similarly to how the sum of natural numbers can be expressed in terms of $n$, the same can be done for sets. Using $A_i = \{x \in \mathbb{Z} : x \geq i\}$ as an example:

| Notation | Value | Reason |

|---|---|---|

| $\displaystyle\bigcup_{i=1}^{n}{A_i}$ | $A_1$ | The intersection of all sets will be $A_1$ because it is the largest set. |

| $\displaystyle\bigcap_{i=1}^{n}{A_i}$ | $A_n$ | $A_n$ is the only set which is a subset of the sets before it, so is the value of the intersection. |

The values will change dependent on the definition of $A_i$.

It is also possible to make a general form for infinity:

$$ \begin{aligned} & \bigcup_{i=1}^{\infty} A_i = \{x:x \in A_i \text{ for some } i \in \mathbb{N} \}\\ & \bigcap_{i=1}^{\infty} A_i = \{x:x \in A_i \text{ for all } i \in \mathbb{N} \} \end{aligned} $$

Using $A_i = \{x \in \mathbb{Z} : x \geq i\}$ as an example again:

$$ \begin{aligned} & \bigcup_{i=1}^{\infty} A_i = A_1\\ & \bigcap_{i=1}^{\infty} A_i = \emptyset \end{aligned} $$ The union to infinity is the same as the the union to $n$. The intersection is different, as the set of integers not contained in the set of integers is the empty set.

Power sets and Cartesian products

Power set

The power set is the set of all subsets of a set.

| Set | Power set |

|---|---|

| $S_1 = \emptyset$ | $2^{S_1} = \{\emptyset\}$ |

| $S_2 = \{a, b\}$ | $2^{S_2} = \{\emptyset, \{a\}, \{b\}, \{a,b\}\}$ |

| $S_3 = \{1\}$ | $2^{S_3} = \{\emptyset, \{1\}\}$ |

In general, the power set of a set $S$ is denoted $2^S$ and is the set of all subsets of $S$. A power set of a finite set $S$ contains $2^{|S|}$ items.

Cartesian product and ordered pairs

The Cartesian product of two sets, $A \times B$, is the set of all ordered pairs $(a,b)$ where $a \in A$ and $b \in B$: $A \times B = \{(a, b) : a \in A, b \in B\}$.

Take two sets, $A = \{k,l,m\}$ and $B = \{q,r\}$. The Cartesian product of these sets would be $\{(k, q), (k, r), (l, q), (l, r), (m, q), (m, r)\}$ . Note that the first item in the sequences is from $A$ and the second is from $B$. For $B \times A$, the pairs will be swapped.

Proof

Direct proof

Direct proofs use assumptions to show something is true. Consider the statement "If $2^A \subseteq 2^B$ then $A \subseteq B$.". To prove this, we can take an arbitrary $x \in A$. It can then be said that $\{x\} \in 2^A$, as $\{x\}$ must be part of the power set of $A$. Since $2^A \subseteq 2^B$, $\{x\} \in B$, so $x \in B$. This shows that $A \subseteq B$ as $x$ is in both. This proof made use of assumption $2^A \subseteq 2^B$ to show $A \subseteq B$.

Using cases

Another way to prove something is by considering all the cases. Take the statement "$1+((-1)^n)(2n-1)$ is a multiple of 4 $\forall n \in \mathbb{Z}$". The value of $(-1)^n$ depends on whether the number is even or odd.

-

In the case the number is even, $(1+1(2n-1) = 2n$. As $n$ is even, we can substitute $n = 2k, k\in \mathbb{Z}$. This gives $4k$, which is divisible by 4.

-

In the case the number is odd, $(1-1(2n-1) = 1-2n+1 = 2-2n$. As $n$ is odd, $n = 2k-1$. This gives $2-2(2k-1) = 2-4k+2 = 4-4k = 4(1-k)$, so it's divisible by 4 when $n$ is odd.

As all $n\in \mathbb{Z}$ must be either odd or even, it has been shown that $1+((-1)^n)(2n-1)$ is a multiple of 4 $\forall n \in \mathbb{Z}$.

Proof by contrapositive

Proof by contrapositive uses the opposite of the statement to show that it isn't true, therefore the original statement was true. Consider "If $7x+9$ is even, then $x$ is odd." Assuming $x$ is even (opposite of the original statement), $x = 2m$. This gives $7(2m)+9 = 14m + 9 = 2(7m+4)+1$ which is always odd, so $7x+9$ is not even when $x$ is even. Therefore $x$ must be odd. Note this could also be proven by direct proof.

Proof by contradiction

Proof by contradiction assumes the opposite of something. Take the statement $\sqrt{2} \notin \mathbb{Q}$. Assuming $\sqrt{2} \in \mathbb{Q}$ (the opposite), it can be defined as a fraction of two integers in it's simplest form. $\sqrt{2} = \frac{m}{n}, m,n \in \mathbb{N} \setminus \{0\}$. Squaring both sides gives $2 = \frac{m^2}{n^2}$, so $2n^2 = m^2$. This means $m$ must be even, so let $m=2k$. $2n^2 = (2k)^2, 2n^2 = 4k^2, n^2 = 2k^2$. This means $n^2$ must be even, therefore $n$ must be even. If both $n$ and $m$ are even, then the fraction is not in it's simplest form. This contradicts $\sqrt{2} \in \mathbb{Q}$, so $\sqrt{2} \notin \mathbb{Q}$.

Whereas proof by contrapositive only takes the opposite of the last part of the statement, proof by contradiction takes the opposite of the whole statement.

Non-constructive proof

Theorem:

"$\exists \: x,y \in \mathbb{R} \setminus \mathbb{Q}:x^y \in \mathbb{Q}$"

(There exists an $x$ and $y$ which belong to the set of irrational

numbers such that $x^y$ is rational.)

Consider $a = \sqrt{2}^{\sqrt{2}}$ and $b = \sqrt{2}$. Therefore

$a^b = (\sqrt{2}^{\sqrt{2}})^{\sqrt{2}}=\sqrt{2}^{\sqrt{2}\times \sqrt{2}} = \sqrt{2}^2 = 2$.

This presents two cases:

-

If $a,b \in \mathbb{R} \setminus \mathbb{Q}$, then $x=a, y=b$. (If $a$ and $b$ are irrational, then it has been shown that $a^b$ is rational)

-

If $a \in \mathbb{Q}$ then $x=\sqrt{2}, y=\sqrt{2}$. (If $a$ turns out to be rational, then it is equal to $\sqrt{2}^{\sqrt{2}}$, where both are irrational)

Only one of the two cases can be true, 1 fails if 2 is true and vice versa, but both show that $x^y$ is rational when $x$ and $y$ are irrational, so the theorem is still proven regardless of which is true.

Logic in proof

Example 1

$$ \begin{aligned} x \wedge (\neg x \vee y) &\equiv x \wedge y \\ x \vee (\neg x \wedge y) &\equiv x \vee y \end{aligned} $$

Proof:

$$ \begin{aligned} \text{1. For all } x, y \in \{T, F\}^2, x \wedge (\neg x \vee y) &= (x \wedge \neg x) \vee (x \wedge y) & \text{(Distributivity)}\\ &= F \vee (x \wedge y) & \text{(Excluded middle)}\\ &= (x \wedge y) \blacksquare & \text{(Identity)}\\ \text{2. For all } x, y \in \{T, F\}^2, x \vee (\neg x \wedge y) &= (x \vee \neg x) \wedge (x \vee y) & \text{(Distributivity)}\\ &= T \wedge (x \vee y) & \text{(Excluded middle)}\\ &= (x \vee y) \blacksquare & \text{(Identity)}\\ \end{aligned} $$

Example 2

Let $A \to (B \to C)$, $\neg D \vee A$ and $B$ be given. Show that $D\to C$.

We want to show $P \to Q$ will always hold, where P is the conjunction

of the first 3 propositions and Q is $D \to C$. This will only not hold

when $P \wedge \neg Q$ is true. To show $P \to Q$ always holds, we have

to find the value of $P \wedge \neg Q$. If this is shown to be false,

then $P \to Q$ will always hold. Replacing $P$ and $Q$ with their values

gives

$(A \to (B \to C)) \wedge (\neg D \vee A) \wedge B \wedge \neg (D \to C)$

$A \to (B \to C) = A \to (\neg B \vee C) = \neg A \vee (\neg B \vee C)$

and $D \to C = \neg D \vee C$.

The equation is now

$(\neg A \vee (\neg B \vee C)) \wedge (\neg D \vee A) \wedge B \wedge \neg(\neg D \vee C)$.

Simplifying a little gives

$(\neg A \vee \neg B \vee C) \wedge (\neg D \vee A) \wedge B \wedge D \wedge \neg C$.

Using $x \wedge (\neg x \vee y) \equiv x \wedge y$ from earlier, we can

eliminate $\neg B$, $C$ and $\neg D$:

$(\neg A) \wedge A \wedge B \wedge D \wedge \neg C$.

$A \wedge \neg A$ is always false, so the whole statement is false.

Therefore $P \wedge \neg Q$ is always false. This means $P \to Q$ always

holds. This means $D \to C$ can never be false, so must hold.

$\blacksquare$

Relations

Introduction

A relation is a subset of the Cartesian product of two sets. For two sets $A$ and $B$, $R \subseteq A \times B$.

The equality relation for the set of integers can be expressed $R = \{(x,y) \in \mathbb{Z} \times \mathbb{Z} : x = y\}$. This is the set of pairs of equal integers.

The inverse of a relation, $R$, is the relation $R^{-1}$. This can be expressed as $R^{-1} = \{(b,a)\in B \times A : (a,b) \in \mathbb{R}\}$.

Because relations are sets, you can apply set theoretic operations to them.

Composition of relations

The composition of two relations, $R$ and $Q$ is shown as $R \circ Q$. Suppose $R$ is a relation between $a$ and $b$ and $Q$ is a relation between $b$ and $c$. $R \circ Q = \{(a,c) \in A \times C : \text{There is a } b \in B \text{ such that } (a,b) \in R, (b,c) \in Q\}$.

Properties of relations on a set

A relation $R$ on a set $S$ is reflexive if $aRa$ for every $a \in S$. This means the relation holds for every pair where both elements are the same.

A relation is symmetric if $aRb$ implies $bRa$ for all $a,b \in S$.

A relation is antisymmetric if $aRb$ and $bRa$ imply $a=b$. This means for a particular relation if $aRb$ and $bRa$ then $a = b$ must be true. For example, $R = \{(x,y) \in \mathbb{Z}^2 : x\leq y\}$ is antisymmetric because $aRb$ and $bRa$ only hold when $a =b$.

A relation is transitive if $aRb$ and $bRc$ implies $aRc$.

A relation is called an equivalence relation if it is reflexive, symmetric and transitive.

A relation is a partial order relation if it is reflexive, antisymmetric and transitive.

Equivalence relations

Equivalence relations and equivalence classes

Given a set $S$ which has an equivalence relation $R$, the equivalence

class, $[a]$, is $\{x \in S : aRx\}$. This means the set of values in

$S$ such that $a$ and $x$ are related by $R$.

As an example, given the set of integers $\mathbb{Z}$, $[5] = \{5\}$ as

it is the only value in the set equal to 5.

Given $R = \{(x,y) \in \mathbb{Z}^2 : x-y \text{ is even }\}$, $[5]$

would be $\{5, 3, 1, ..., 7, 9, ...\}$ or just the set of odd numbers,

as this is the set of numbers which when subtracted from 5 are even.

Representatives of equivalence classes

Any element from an equivalence class is called a representative of it.

For example, if $x \in [a]$, then $x$ is a representative of $[a]$.

Lemma 1 - Every equivalence class is generated by any of it's

representatives.

This can be expressed as $\forall b \in [a],\: [b]=[a]$.

Second lemma

Lemma 2 - $\forall a,b \in S$ either $[a] \cap [b] = \emptyset$ or $[a]=[b]$. This means for two representatives of a set, their equivalence classes are either equal or do not overlap.

Partitions

Sets $(A_i){i \in I}$ form a partition of a set $B$ if $\displaystyle\bigcup{i \in I}{A_i} = B$ and $A_i \cap A_j = \emptyset$ for all $i,j \in I, i \neq j$.

The quotient of $S$ with respect to relation $R$ is denoted by $S/R$, where $S/R = \{[a]_R : a \in S\}$.

Examples

Example 1: Let $S = \mathbb{Z}$ and $E = \{(x,x) : x \in \mathbb{Z}\}$.

$\mathbb{Z}/E=\{[a]_E:a \in \mathbb{Z}\} = \{\{x \in \mathbb{Z}:aEx\}:a \in \mathbb{Z}\}=$

$\{\{x\}:x \in \mathbb{Z}\}=\{\{0\},\{1\},...,\}$.

Example 2: Let $T = \{(x,y) : x,y \in \mathbb{Z}\}$. $\mathbb{Z}/T = \{\mathbb{Z}\}$?

Example 3: $S = \mathbb{Z} \times (\mathbb{Z} \setminus \{0\})$ and $R = \{((a,b),(c,d)): a \times d = b \times c\}$. $S/R = \mathbb{Q}$?

Functions

A relation $R \subseteq X \times Y$ is a function if for every $x \in X$ there is a unique $y \in Y$ such that $xRy$.

$f(x)$ is the value of $f$ at $x$. It is the image of $x$.

If $y = f(x)$, $x$ is a pre-image of $y$.

The complete pre-image of $y$ would be denoted $f^{-1}(y)$. This is

$\{x \in X : f(x) = y\}$.

The range of a function $f$ is the set $f(X)$ where $X$ is the domain.

$f(X) = \{f(x) : x \in X\}$.

Failure to be a function

A relation fails to be a function if

-

There is an item in the domain which is not mapped to the co-domain.

-

An item in the domain maps to more than one item in the co-domain.

Composition of functions

Given two relations $R \subseteq A \times B$ and $Q \subseteq B \times C$, $R \circ Q$ was their composition. This was the set of pairs from the Cartesian product of $A$ and $C$ such that they are linked by some $b \in B$.

Given two functions $f:X \to Y$ and $g:Y \to Z$, the composition , $f \circ g$ is also a function.

Properties of functions

A function is injective or one to one if each item in the co-domain is mapped to by exactly one item in the domain.

A function is surjective if every value in the co-domain is mapped to by an item in the domain.

A function is bijective if it is injective and surjective.

A function $f:X \to Y$ is bijective if and only if the inverse relation $f^{-1} \subseteq Y \times X$ is a function.

Countability and cardinality

Equinumerous sets

Two sets, $A$ and $B$, are equinumerous (same cardinality) if there is a bijection that maps $A$ to $B$. This is denoted $A \cong B$.

Finite, countable and uncountable sets

Consider

$F_1 = \{0\}, F_2 = \{0,1\}, F_3 = \{0,1,2\}, ... , F_n = \{x \in \mathbb{N} : x < n\}$.

$F_i \cong F_j$ if and only if $i=j$.

A set $S$ is finite if $S \cong F_i$ for some $i$.

A set is countably infinite is $S \cong \mathbb{N}$

A set is countable if it is finite or countably infinite.

$\mathbb{N}$ is used as the definition of countably infinite. Consider the set of all even integers - $\{x \in \mathbb{N}: x \text{ is even}\}$. This set is equinumerous to $\mathbb{N}$ because there exists a bijective function which maps $\mathbb{N}$ to the set. This function is $f(n) = 2n$.

The set of integers, $\mathbb{Z}$, is countably infinite. This can be shown by considering pairs of $(i,j)$ where $i$ is the "index" of $j$ in $\mathbb{Z}$. This would give $(0,0), (1,1), (2,-1), (3,2),...(i, j)$. Every $j \in \mathbb{Z}$ is given an index $i \in \mathbb{N}$. This is bijective as each $i$ maps to exactly one $j$ and there are no values of $j$ which do not have a value of $i$. Therefore $\mathbb{N} \cong \mathbb{Z}$.

If there is no bijective mapping from $\mathbb{N}$ to a set and it isn't finite, it is uncountable.

Cardinality of power sets

If $S$ is a set, $S \not\cong 2^S$. Given this, $2^\mathbb{N} \not\cong \mathbb{N}$. Therefore $2^\mathbb{N}$ is uncountable.

Graphs

A graph can be expressed a $G = (V, E)$, where $V$ is the set of vertices (nodes) and $E$ is the collection of edges (arcs). $E$ is not necessarily a set because there may be two edges between the same two vertices. In a directed graph, an edge could be represented $(u,v) \in E$ where $u,v \in V$. Because the edge is directed, the order of the pair is important. In an undirected graph the order of the pair is unimportant.

Adjacency, incidence, degrees and the handshaking lemma

A vertex is adjacent to another if they are connected by an edge.

An edge is incident to a vertex is it is joining it. For example, the edge between two vertices $a$ and $b$ is incident to $a$ and incident to $b$.

The degree of a vertex is the number of edges incident to that vertex. Note that if a vertex has a loop, then the loop is counted twice. In a directed graph, the in-degree of a vertex is the number of edges going into a vertex. The out-degree is the number of edges going out if the vertex.

The handshaking lemma states that the total degree of vertices in a graph is double the number of edges. Because of this, it is also true that an undirected graph has an even number of vertices of odd degree, as the sum of the degrees needs to be divisible by 2 (as the number of edges is half the total degree).

Isomorphism

An isomorphic graph is the same graph drawn differently. Two simple graphs, $G_1 = (V_1, E_1)$ and $G_2 = (V_2, E_2)$, are isomorphic if there exists a bijective function $f$ which maps $V_1$ to $V_2$ such that $\forall u,v \in V_1, (u,v) \in E_1 \Leftrightarrow (f(u), f(v)) \in E_2$. This means that both graphs must have the same edges between vertices.

Important graphs and graph classes

A graph with no edges is an empty graph.

A graph where every vertex is adjacent to every other is a complete

graph.

Graphs continued

Walks, paths, tours and cycles

A walk in a graph $G = (V,E)$ is a finite sequence

$V_0, (V_0, V_1), (V_1), (V_1, V_2), V_2, ...$ where $V_n$ is a vertex

and pairs of vertices represent edges.

A walk without repeated edges is a path. A simple path doesn't repeat

any vertex.

A tour is a walk which starts and ends at the same vertex.

A tour is a cycle if there are no repeated edges. A simple cycle has no

repeated vertices except the first/last one.

Moving along an edge from one vertex to another can be expressed as

$V_i \to V_j$. To express multiple edges, you can use $V_0 \to^* V_n$.

If a walk exists between $V_0$ and $V_n$, it can be said that $V_n$ is

reachable from $V_0$.

In an undirected graph, if $u \to^* v$, then $v \to^* u$, as if there

exists a walk from $u$ to $v$ then there exists a walk from $v$ to $u$.

It is symmetric.

In a directed graph, vertices are mutually reachable if there is a walk

between them in both directions.

Eulerian and Hamiltonian cycles

A cycle is Eulerian if it traverses every edge exactly once. A connected, undirected graph with even degree vertices and no isolated vertices has an Eulerian cycle.

A Hamiltonian cycle visits every vertex of a graph exactly once.

Planar graphs

A planar graph can be drawn without edges overlapping. If a graph is not planar, then it's subgraphs will not be planar either. If a subgraph is not planar, the whole graph will not be planar either.

Trees

Introduction

A tree is a connected graph with no cycles.

Spanning trees

A subgraph ($G' = (V, F)$) of a tree ($G = (V, E)$), is called a

spanning tree if it contains every vertex of the tree and is itself a

tree.

Removing an edge from a cycle will leave a connected graph.

Adding a new edge to a connected graph on the existing vertices will

create a cycle.

Every connected graph has a spanning tree.

Rooted trees

A rooted tree has a distinct root vertex from which all other vertices are connected.

Partial orders

As mentioned previously, a partial order is a relation which is reflexive, antisymmetric and transitive. If the $\leq$ relation on a set $S$ satisfies $\forall x,y \in S \quad x \leq y \vee y \leq x$, then it is a total order. If $x \in S: x \not\leq y \wedge y \not\leq x$, then $x$ and $y$ are incomparable.

Least/greatest elements, minima/maxima

For the $\leq$ relation on a set, the least element in a partial order

is $x \in P$ such that $\forall y:\: x \leq y$.

The greatest element is the opposite.

The minimal element is $x$ if $\forall y:\: y \leq x \to y=x$.

The maximal element is $x$ if $\forall y:\: y \geq x \to y=x$.

Hasse diagram

The Hasse diagram of the partial order $P_\leq$ is the directed graph

$G =(V,E)$ such that $V=P$ and

$P = \{(x,y):x < y \wedge \nexists z \in P \text{ such that } x<y \wedge z<y\}$.

This means $x$ and $y$ are adjacent. A dense partial order is where

there is no $(x,y)$ which satisfies the conditions. Therefore there is

no Hasse diagram.

All Hasse diagrams of partial orders are directed acyclic graphs because

of the properties of partial orders.

Probability

Consider a die. It has a sample space $\Omega = \{1,2,3,4,5,6\}$. Elements of the sample space are called elementary outcomes. An event is a subset of the sample space, $E \subseteq \Omega$. For example, $E = \{2,4,6\}$ is an event.

Random experiments

Consider flipping two coins. This is a random experiment. The sample space for a single flip is $\{H, T\}$. For two coins, this would be $\{HH, HT, TT, TH\}$, or more concisely, $\{H, T\}^2$.

The event for "first coin flipped is a head" is $E_1 = \{HH, HT\}$.

The event for "first coin equals second" is $E_2 = \{HH, TT\}$.

Another example is rolling three dice. The sample space is $\Omega = \{1,2,3,4,5,6\}^3$.

Another example is a hand of 5 cards. A single card has both a suit and a rank so the set of cards is $C = \{H,D,C,S\} \times \{A,2,3,...,J,Q,K\}$. The sample space for 5 cards is $\{A \subseteq C: |A| = 5\}$. $C^5$ is not correct as once a card is dealt it can't be dealt again.

Combinatorics

$|A \times B| = |A| \times |B|$.

A set of cardinality $n$ has $n!$ permutations.

An arrangement is like multiple permutations up to a given length. There are $n(n-1)...(n-k+1)$ elements in an arrangement of a set of size $n$ and length $k$.

A combination is a permutation where the order does not matter and the items are of a given size $k$. Where a permutation would include AB and BA, the combination only includes AB. The size of a combination of a set of size $n$ with items of length $k$ is the size of the equivalent arrangement divided by $k!$ which is $\frac{n!}{k! (n-k)!}$.

Assigning probabilities

The probability of an event $E$ is denoted $P(E)$.

A probability space is an ordered triple, $(\Omega, F, P)$ where

$\Omega$ is the sample space, $F$ is the collection of all events, given

by $2^\Omega$ as an event is a subset of $\Omega$ and $2^\Omega$ is all

subsets of $\Omega$. $P$ is the probability measure. $P$ is a function

from events to real numbers between 0 and 1 inclusive.

A fair coin would sample space $\{H, T\}$ with $P_H = 0.5,$ and $P_T = 0.5$.

Events

Probability of union

$P(A_1 \cup A_2 \cup ... \cup A_k) = \sum\limits^k_{i=i} P(A_i)$ if

$A_i \cap A_j = \emptyset$ and $i \neq j$.

$P(A \cup B) = P(A) + P(B)$ if $P(A \cap B) = 0$.

$P(A \cup B) = P(A) + P(\overline{A} \cap B)$.

Union bound

The union bound for two events is $P(A \cup B) \leq P(A) + P(B)$ and more generally $P(\bigcup^n_{i=1} A_i) \leq \sum^n_{i=1} P(A_i)$.

Probability of complement and product

$P(\overline{A}) = 1 - P(A)$ because $A \cup \overline{A} = \Omega$ and $A \cap \overline{A} = \emptyset$, so $P(\Omega) = P(A \cup \overline{A}) = P(A) + P(\overline{A})=1$ and as $P(A) + P(\overline{A}) = 1$, $P(\overline{A}) = 1 - P(A)$.

In general, $P(A \cap B) \neq P(A) \times P(B)$. When they are equal, the events are independent.

Conditional probability

Given a probability space $(\Omega, 2^\Omega, P)$, an event $B \in 2^\Omega$, $P(B) > 0$ and an event $A \in 2^\Omega$, the probability of $A$ given $B$ is denoted $P(A|B) = \frac{P(A \cap B)}{P(B)}$.

Law of total probability

$P(A) = \sum\limits^k_{i=1} P(A|B_i) \times P(B_i)$ where $B_1, ... ,B_k$ are all mutually exclusive events in a sample space and $A$ is any event in the sample space.

Bayes' theorem

$P(B_i|A) = \frac{P(A|B_i) \times P(B_i)}{P(A)}$

Events are independent if $P(A \cap B) = P(A) \times P(B)$.

Random variables

A random variable is a function from the sample space to the real numbers.

Bernoulli distribution

$$ X = \begin{cases} 1 & \text{if success}\\ 0 & \text{if failure} \end{cases} $$

For example, a coin flip has Bernoulli distribution with parameter $p$ where

$$ X(\omega) = \begin{cases} 1 & \text{if } \omega = t\\ 0 & \text{if } \omega = h \end{cases} $$

Binomial distribution

The binomial distribution can model $n$ coin flips. The outcome of each flip is independent and $P(t) = p$.

$$ X_i = \begin{cases} 1 & \text{if } i^\text{th} \text{ flip gives tails}\\ 0 & \text{otherwise} \end{cases} $$

This means $X_i$ has Bernoulli distribution with parameter $p$. The

Bernoulli distribution is the binomial distribution with $n=1$.

Let $X = X_1 + ... + X_n$. $X$ has binomial distribution with parameters

$n$ and $p$, where $n$ is the number of trials and $p$ is the

probability of success.

Given the Bernoulli distribution $X \sim{} \operatorname{Bi}(1, p)$:

| $x$ | 0 | 1 |

|---|---|---|

| $P(X=x)$ | $1-p$ | $p$ |

Given the binomial distribution $X \sim \operatorname{Bi}(3, p)$:

| $x$ | 0 | 1 | 2 | 3 |

|---|---|---|---|---|

| $P(X=x)$ | $(1-p)^3$ | $3(1-p)^2p$ | $3(1-p)p^2$ | $p^3$ |

Expectation

Take a random variable $X$ which only takes values in $\{0, 1, 2, 3\}$. The expectation of $X$ is defined as $\sum\limits_{i=0}^3\left(i \times P(X=i)\right)$, or $0 \times P(X=0) + P(X=1) + 2P(X=2) + 3P(X=3)$. If $X$ is a uniform distribution, $P(X=i) = \frac{1}{4}$ so the value of the expectation is $\frac{1}{4} + 2\left(\frac{1}{4}\right) + 3\left(\frac{1}{4}\right) = \frac{0 + 1 + 2 + 3}{4} = 1.5$.

In general, if the range of $X$ is

$\{a_1, a_2, ..., a_n\} \subseteq \mathbb{R}$ then the expectation of

$X$ is denoted

$EX = \sum\limits_{i=1}^n \left( a_i \times P(X=a_i)\right)$. This

definition works if the range of $X$ is finite.

Consider a roulette wheel with numbers 0 to 36, 18 black, 18 red and 0. If you bet on red or black and are correct you get double your bet, if you're wrong you get nothing and if you get 0 you get half of your bet. This can be represented with a sample space $\Omega = \{r, b, z\}$. $P(r) = P(b) = \frac{18}{37}$ and $P(z) = \frac{1}{37}$. The returns can be modelled with a random variable $R$. Suppose £1 is bet on black. This will give

$$ R = \begin{cases} 0 & \text{if } r \\ 2 & \text{if } b \\ 0.5 & \text{if } z \end{cases} $$

Therefore the expectation, $ER$, for this scenario is $P(r)R(r) + P(b)R(b) + P(z)R(z) = 2\left(\frac{18}{37}\right)+\frac{1}{37}\times\frac{1}{2} = \frac{73}{74} \approx 0.986$, so the return is approximately £0.99.

The expectation of the uniform distribution is

$\sum\limits_{k=1}^n\left(k \times \frac{1}{n}\right) = \frac{1}{n} \sum\limits_{k=1}^n k = \frac{1}{n} \times \frac{n(n+1)}{2} = \frac{n+1}{2}$.

The expectation of the Bernoulli distribution is

$0 \times (1-p) + 1 \times p = p$.

Expectation of sum and linearity of expectation

Given two random variables, $X$ and $Y$, the expectation of their sum, $E(X+Y)$ is the sum of their expectations, $E(X)+E(Y)$.

Expectation is linear, so $E(X+Y) = E(X) + E(Y)$ and $E(\alpha X) = \alpha E(X)$, $\alpha \in \mathbb{R}$. This is called the linearity of expectation.

Expectation of product

If random variables are independent then $E(X \times Y) = E(X) \times E(Y)$.

Let $X$ be a random variable with binomial distribution. $X = X_1 + X_2 + X_3...$ where $X_i$ is a Bernoulli distribution. Using the linearity of expectation, the expectation of a binomial random variable is $np$, where $n$ is the number of trials and $p$ is the probability of success.

Functions of random variables and variance

The expectation of $g(X)$ (where $X$ is a random variable) is given by $\sum\limits_{k}\left(k \times P(g(X) = k)\right)$ where $k$ is every value given by $X$. This can be rewritten as $E(g(x)) = \sum\limits_a \left(g(a) \times P(X=a) \right)$ where $a$ is every element of the sample space.

The expectation of $X^k$ is called the $k^\text{th}$ moment of $X$.

Let $m = EX$ and $g(x) = (x-m)^2$.

$E(g(X)) = E(X-m)^2 = E(X-EX)^2$, which is the variance of $X$. The

variance says how far the value of a random variable is from it's

expectation. The square root of the variance gives the standard

deviation. Using linearity of expectation it can be shown that the

variance is equal to $E(X^2) - (EX)^2$.

Markov's inequality

Let $X$ be a random variable, $X \geq 0$. Markov's inequality states that $\forall a \in \mathbb{R}_{>0}$ $P(X \geq a) \leq \frac{EX}{a}$.

Events and infinite sample spaces

The set of events in a probability space can be a subset of $2^\Omega$.

CS131 - Mathematics for Computer Scientists II

These are just my unedited lecture notes.

Number systems

Integers and Reals

Two integers $a$ and $b$ are congruent modulo $n>1$ if $a-b$ is an integer multiple of $n$ ( $a=b+kn$ for some $k \in \mathbb{Z}$). This can be written as $a \equiv b$ mod $n$ or $a \overset{n}{\equiv} b$.

Given any integers $a,n \in \mathbb{Z}$ with $n \neq 0$, there are unique integers $q,r \in \mathbb{Z}$ such that $a=qn+r$ and $0 \leq r < |n|$. $q$ is the quotient and $r$ is the remainder. The remainder is the smallest non-negative integer $b$ such that $a \equiv b$ mod $n$. The notation $a$ mod $n$ is used to denote the remainder.

Congruences with the same modulus can be added, subtracted and multiplied. If $x \equiv 3$ mod $n$ and $y \equiv 5$ mod $n$ then $(x+y) \equiv 8$ mod $n$ and $(x \times y) \equiv 15$ mod $n$.

Rational numbers

A rational number has the for $\frac{m}{n}$ where $m,n \in \mathbb{Z}$

and $n \neq 0$.

There is always a $m$ and $n$ such that $n \geq 1$ and

$\operatorname{gcd}(m,n)=1$.

Every non-zero rational number $q$ has an inverse $q^{-1}$ with the

product $q \times q^{-1}=1$.

Real numbers

Irrational numbers

An algebraic number is a real number (like $\sqrt{2}$) that is the solution of a polynomial equation with rational coefficients.

Transcendental numbers are real numbers which can't be the solutions of polynomial equations with rational coefficients such as $\pi$ and $\mathrm{e}$.

All the properties of the real number system can be derived from 13 axioms. These axioms hold $\forall x,y,z \in \mathbb{R}$. The first 6 axioms also hold for $\mathbb{N}$. The 7th holds for $\mathbb{Z}$. 8 to 12 hold for $\mathbb{Q}$. 13 only holds for $\mathbb{R}$.

-

Commutativity ($x+y=y+x$ and $x \times y = y \times x$)

-

Associativity ($x+(y+z)=(x+y)+z$ and $x(yz) = (xy) \times z$)

-

Distributivity of multiplication over addition ($x(y+z) = xy + xz$)

-

Additive identity (There exists 0 such that $x+0-x$)

-

Multiplicative identity (There exists 1 such that $x \times 1= x$)

-

The multiplicative and additive identities are distinct ($1 \neq 0$)

-

Every element has an additive inverse. There exists $(-x)$ such that $x+(-x)=0$.

-

Every non-zero number has a multiplicative inverse. If $x \neq 0$ then there exists $x^{-1}$ such that $x \times x^{-1}=1$.

-

Transitivity of ordering ($x<y$ and $y<z$ then $x<z$)

-

Trichotomy law. Exactly one of $x < y$, $y <x$ or $x=y$ is true.

-

Preservation of ordering under addition (If $x<y$ then $x+z<y+z$)

-

Preservation of ordering under multiplication (If $0<z$ and $x<y$ then $xz<yz$)

-

The axiom of completeness. Every non-empty subset that is bounded above has a least upper bound.

Upper and lower bounds, supremum and infimum

Let $S$ be a set of real numbers.

-

A real number $u$ is an upper bound of $S$ if $u \geq x$ for all $x \in S$.

-

A real number $U$ is the least upper bound (supremum) of $S$ if $U$ is an upper bound of $S$ and $U \leq u$ for every upper bound $u$ of $S$.

-

A real number $l$ is a lower bound of $S$ if $l \leq x$ for all $x \in S$.

-

A real number $L$ is the greatest upper bound (infimum) of $S$ if $L$ is a lower bound of $S$ and $L \geq l$ for every lower bound $l$ of $S$.

The completeness axiom says that every non-empty set of real numbers which has an upper bound has a least upper bound. From this we can derive that every non-empty set of real numbers which has a lower bound has a greatest lower bound.

::: center

From presentation slides

:::

The real numbers are completely characterised by 12 basic algebraic and order axioms and the completeness axiom. Any theorem about real numbers can be derived from these. Any structure satisfying these properties can be shown to be essentially identical to $\mathbb{R}$. The completeness axiom implies that $\{x\in \mathbb{R}\:|\:x^2 < 2\}$ has a least upper bound. It follows that there exists $x \in \mathbb{R}$ such that $x^2 = 2$.

Complex numbers

A complex number is of the form $a+ib$ where $a,b \in \mathbb{R}$ and $i = \sqrt{-1}$. The conjugate of a complex number $z$ is $\overline{z}$. For any $z,w \in \mathbb{C}$:

-

$\overline{z+w} = \overline{z} + \overline{w}$

-

$\overline{zw} = \overline{z} \times \overline{w}$

-

$\overline{z \div w} = \overline{z} \div \overline{w}$

-

$\operatorname{Re}(z) = \frac{z+\overline{z}}{2}$

-

$\operatorname{Im}(z) = \frac{z-\overline{z}}{2}$

The modulus of a complex number $a+bi$, denoted $|a+bi|$, is $\sqrt{a^2+b^2}$. For any $z \in \mathbb{C}$:

-

$|z| = |\overline{z}|$

-

$|z| = \sqrt{z\overline{z}}$

For any $z,w \in \mathbb{C}$:

-

$|zw|=|z||w|$

-

$|z+w|\leq |z| + |w|$

-

$||z|-|w|| = \leq |z-w|$

Any complex number $x+iy \neq 0$ can be expressed in polar coordinates. It uses the modulus $r = \sqrt{x^2+y^2}$ and argument $\theta = \arctan{(\frac{y}{x})}$, $-\pi < \theta \leq \pi$. This means $x+iy$ can be expressed as $r(\cos{\theta}+i\sin{\theta})$. $x=r\cos{\theta}$ and $y = r\sin{\theta}$.

De Moivre's theorem

$(\cos{\theta}+i\sin{\theta})^n = \cos{n\theta}+i\sin{n\theta}$.

Linear algebra

Vectors

Vectors in 2 and 3-dimentional space are defined as members of the sets $\mathbb{R}^2$ and $\mathbb{R}^3$ respectively.

The vector $\overrightarrow{OP}$ is the directed line segment starting at the origin and ending at the point $P$. Vectors with the same length and direction are equivalent.

The scalar or dot product of two vectors, $\mathbf{a}.\mathbf{b}$ is $|\mathbf{a}||\mathbf{b}|\cos{\theta}$ and rearranged $\cos{\theta} = \frac{\mathbf{a}.\mathbf{b}}{|\mathbf{a}||\mathbf{b}|}$. Two vectors are perpendicular if their dot product is 0.

Vectors in $n$ dimensions

An $n$-dimensional space $\mathbb{R}^n$ exists for any positive

integer.

Summation, scalar multiplication, modulus and dot product all work

similarly to 2 and 3 dimensions.

Linear combinations and subspaces

Linear combinations

Given two vectors $u$ and $v$ and two scalars $a$ and $b$ a vector of the form $au+bv$ is a linear combination of $u$ and $v$. Take $(5,3)$. This can be written as $5(1,0) + 3(0,1)$ or $1(2,0) + 3(1,1)$. $(5,3)$ is a linear combination of these.

To express (6,6) as a linear combination of (0,3) and (2,1), let $(6,6) = \alpha(0,3) + \beta(2,1)$. Expanding this gives $(6,6) = (2 \beta, 3 \alpha + \beta)$. Setting $2\beta =6$ and $3 \alpha + \beta = 6$ and solving gives $\alpha = 1$ and $\beta = 3$. Therefore $(6,6) = 1(0,3)+3(2,1)$.

Linear combinations also exist for $n$-dimensional vectors. To express $(3,0)$ as a linear combination of $(1,1)$, $(1,0)$ and $(1,-1)$ in multiple different ways let $(3,0) = \alpha(1,1) + \beta(1,0) + \gamma(1,-1)$ which gives $(3,0) = (\alpha + \beta + \gamma, \alpha - \gamma)$. Solving gives $\alpha = \gamma$ and $\beta = 3 - 2\gamma$. Then any value can be chosen for $\gamma$ to give different linear combinations, such as $\gamma = 0$ gives $\alpha = 0$ and $\beta=3$.

Given non-parallel vectors $u$ and $v$ the vector $\alpha u + \beta v$ is the diagonal of the parallelogram with sides $\alpha u$ and $\beta v$.

Let $u = (1,0,3)$, $v = (0,2,0)$ and $w = (0,3,1)$.

The linear combination

$2u+3v+4w = 2(1,0,3) + 3(0,2,0) + 4(0,3,1) = (2,18,10)$.

It is not possible to express $(1,5,4)$ as a linear combination of $u$,

$v$ and $w$ because when solving the equations the solutions are not

consistent.

Span

If $U = \{u_1 + u_2, ..., u_m\}$ is a finite set of vectors in $\mathbb{R}^n$ then the span of $U$ is the set of all linear combinations of $u_1, u_2,..., u_m$.

If $U = \{u\}$ then span

$\{u\} = \{\alpha u\:|\:\alpha \in \mathbb{R}\}$.

If $U = \{(1,0),(0,1)\}$ then the span of $U$ is $\mathbb{R}^2$. Any

arbitrary vector $(x,y)= x(1,0) + y(0,1)$.

Subspace

A subspace of $\mathbb{R}^n$ is a nonempty subset $S$ of $\mathbb{R}^n$ with two properties. The first is closure under addition, which means $u,v \in S \rightarrow u+v \in S$ and the second is closure under scalar multiplication which means $u \in S, \lambda \in \mathbb{R} \rightarrow \lambda u \in S$.

If $S$ is a subspace and $u_1, u_2, ..., u_m \in S$ then any linear combination of $u_1, u_2, ..., u_m$ also belongs to $S$. Two simple subspaces of $\mathbb{R}^n$ are the set containing only the zero vector and $\mathbb{R}^n$ itself.

Linear Independence

A set of vectors $\{u_1,u_2,...,u_m\}$ in $\mathbb{R}^n$ is linearly

dependent if there are numbers

$\alpha_1,\alpha_2,...,\alpha_m \in \mathbb{R}$, not all zero such that

$\alpha_1 u_1 + \alpha_2 u_2 + ... + \alpha_m u_m = 0$.

A set of vectors is linearly independent it is not linearly dependent

($a_i = 0$ for all $i$).

$\{(1,2,3),(1,-1,-1),(5,1,3)\}$ is linearly dependent. $\alpha(1,2,3) + \beta(1,-1,-1) + \gamma(5,1,3) = 0$. This gives $\alpha + \beta + 5 \gamma=0$, $2\alpha - \beta+\gamma$ and $3\alpha -\beta +3\gamma$. Solving these gives $\alpha = -2\gamma$ and $\beta = -3\gamma$. Let $\gamma \neq 0$. This means $\alpha$ and $\beta \neq 0$, so they are linearly independent.

Basis

Given a subspace $S$ of $\mathbb{R}^n$, a set of vectors is called a basis of $S$ if it is a linearly independent set which spans $S$. The set $\{(1,0,0), (0,1,0),(0,0,1)\}$ is a basis for $\mathbb{R}^3$.

Standard basis

In $\mathbb{R}^n$, the standard basis is the set

$\{e_1, e_2, ..., e_n\}$ where $e_r$ is the vector with $r$th component

1 and all other components 0.

The standard basis for $\mathbb{R}^5$ is $\{e_1, e_2, e_3, e_4, e_5\}$

where

$$ \begin{aligned} e_1 &= (1,0,0,0,0)\\ e_2 &= (0,1,0,0,0)\\ e_3 &= (0,0,1,0,0)\\ e_4 &= (0,0,0,1,0)\\ e_5 &= (0,0,0,0,1) \end{aligned} $$

If the set $\{v_1, v_2, ..., v_m\}$ spans $S$, a subspace of $\mathbb{R}^n$, then any linearly independent subset of $S$ contains at most $m$ vectors.

Dimension

The dimension of a subspace of $\mathbb{R}^n$ is the number of vectors in a basis for the subspace. Since the standard basis for $\mathbb{R}^n$ contains $n$ vectors it follows that $\mathbb{R}^n$ has dimension $n$.

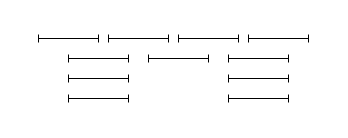

Coordinates